On April 10th, 2015, I was fortunate to travel from Hillsboro, Oregon, to San Francisco, California, specifically to take part in the International NASA Space Apps Challenge hosted at Constant Contact. The challenge ran for two days in 133 cities around the world and focused on four themes: Earth, outer space, humans, and robotics.

On the first day, Intel donated Intel Edison platforms and sensor kits to all participants interested in using them during the challenge and beyond, because who said creativity is limited to two days? I had the pleasure of distributing the kits, getting to know the participants in the process, and being inspired by their backgrounds, motivation, creativity, and excitement. That inspiration and excitement were not limited to our location: participants spread them by tweeting about their projects and the event during both days. We even kept in touch on Twitter with participants in other locations worldwide, especially my colleague Wai Lan, who supported the event in NYC and wrote a great blog post about his experience there.

On day two of the challenge, the teams presented their projects, and the projects were nothing short of amazing. The teams' imaginations were limitless; truly, the sky was not the limit. I was particularly happy to learn that the top two projects, which qualified for the global competition, both used Intel technology. Team ScanSat and Team AirOS, you rocked!

Team ScanSat:

Team ScanSat members are Anand Biradar (aerospace engineer), Krishna Sadasivam (computer engineer), Sheen Kao (mechanical engineer), and Robert Chen (computer scientist). Their project tackled the Deep Space CamSat Challenge. The team came to the event with an idea already in hand: to develop the docking and magnetic propulsion mechanisms for a CubeSat docked with a Dragon-sized spacecraft. Using magnetic and ion propulsion, ScanSat should be able to undock from and re-dock with the main craft autonomously. Because ScanSat is meant to carry a camera, it can capture images of the spacecraft as the craft approaches interesting phenomena, and it can run image processing to analyze the craft's exterior.

When the members arrived, they were pleasantly surprised to find that Intel was providing hardware. As a result, they were able to expand their original idea, which covered only the docking and magnetic propulsion mechanisms of ScanSat, into an actual proof-of-concept demo. The end result was stellar. Using the hardware listed below, they built a CubeSat that can steer in all directions by following a red light representing the mothership.

The list of hardware components used for the demo:

- 1 x Intel® Edison with Arduino Breakout Kit

- 1 x Base Shield v2 from the Grove starter kit

- 4 x Grove - Smart Relay

- 1 x Camera

- 1 x DC Motor

- 1 x Lunchbox

- 1 x Tub

- Water

On the software side, they used:

- Python

- OpenCV image processing libraries

- libmraa for I/O control - https://github.com/intel-iot-devkit/mraa
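To make the "follow the red light" behavior a little more concrete, here is a minimal sketch of how a steering decision could be derived from a camera frame. It is not taken from the team's source code. The red-pixel thresholds, the dead zone, and the command names (`search`, `hold`, `left`, `right`, `up`, `down`) are all hypothetical. The sketch uses plain Python with a frame stored as nested lists of RGB tuples instead of OpenCV, so it runs anywhere.

```python
from typing import List, Optional, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each 0-255
Frame = List[List[Pixel]]     # indexed as frame[y][x]


def is_red(p: Pixel, min_red: int = 150, max_other: int = 100) -> bool:
    """Hypothetical threshold: strong red channel, weak green and blue."""
    r, g, b = p
    return r >= min_red and g <= max_other and b <= max_other


def red_centroid(frame: Frame) -> Optional[Tuple[float, float]]:
    """Average (x, y) position of all red pixels, or None if there are none."""
    sum_x = sum_y = count = 0
    for y, row in enumerate(frame):
        for x, p in enumerate(row):
            if is_red(p):
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        return None
    return sum_x / count, sum_y / count


def steering_command(frame: Frame, dead_zone: float = 0.1) -> str:
    """Turn the red target's offset from the image center into a movement command."""
    centroid = red_centroid(frame)
    if centroid is None:
        return "search"  # no target visible
    height, width = len(frame), len(frame[0])
    # Offset from the center as a fraction of the image size (roughly -0.5..0.5).
    dx = (centroid[0] - (width - 1) / 2) / width
    dy = (centroid[1] - (height - 1) / 2) / height
    if abs(dx) <= dead_zone and abs(dy) <= dead_zone:
        return "hold"  # target is close enough to the center
    # Correct along whichever axis is further off; image y grows downward.
    if abs(dx) >= abs(dy):
        return "right" if dx > 0 else "left"
    return "down" if dy > 0 else "up"


if __name__ == "__main__":
    black, red = (0, 0, 0), (255, 0, 0)
    frame = [[black] * 5 for _ in range(5)]
    frame[2][4] = red  # row 2, column 4: right of center
    print(steering_command(frame))  # right
```

In a real build, the thresholding and centroid steps would more likely use OpenCV (for example `cv2.inRange` followed by `cv2.moments`), and each command would then be mapped to the relays through libmraa's `mraa.Gpio` class. Which relay drives which motion is an assumption this sketch leaves open.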

Source Code: https://github.com/ksivam/scansat

Video Demo: https://www.youtube.com/watch?v=CDbrzUlAxt4

Team AirOS:

Team AirOS members are Patrick Chamelo, Mario Roosiaas, Maria Rossiaas, Karl Aleksander Kongas, David Bradley, Marc Seitz, and Scott Mobley. Their project tackled the Space Wearables: Designing for Today's Launch & Research Stars Challenge. Inspired by Star Wars, they developed an augmented reality platform designed for gesture control, voice control, and maximum awareness of an astronaut's surroundings. It feeds live sensor data into the user's HUD and supports instant video communication with other astronauts, remote teleconferencing, voice control, and AI assistance.

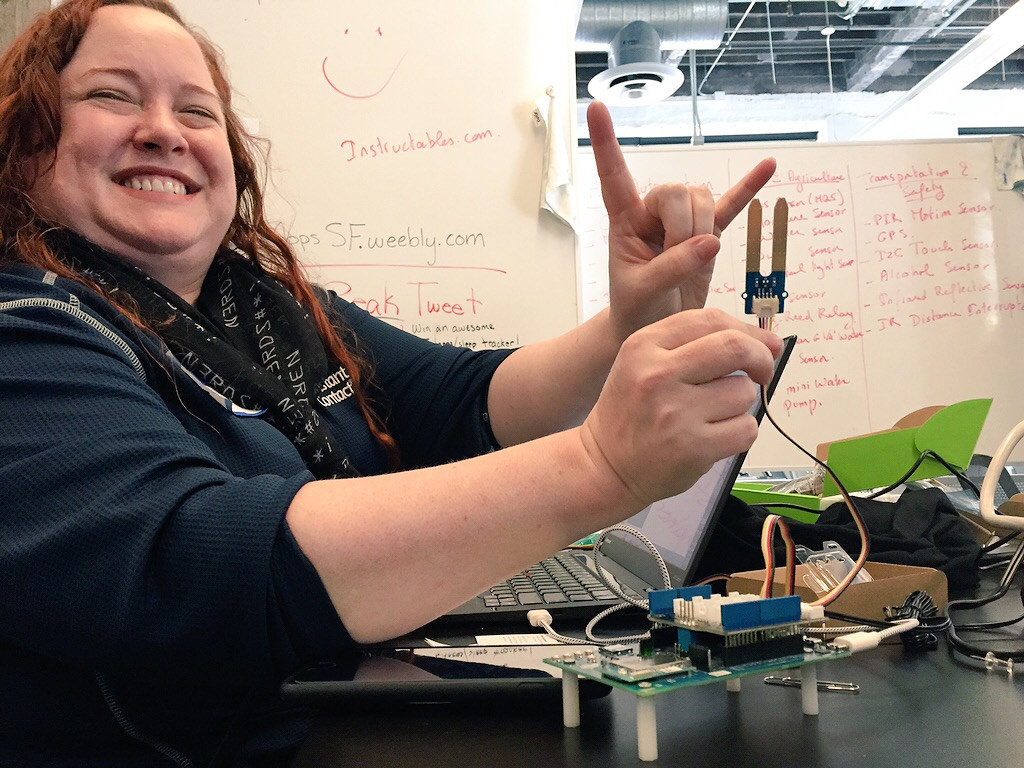

The team was creative and resourceful, combining multiple technologies and tools into a vision of the next generation of space wearables. The project pipes video straight into an Oculus Rift, creating a pseudo augmented reality environment. On top of the video, it overlays a GUI that receives live temperature updates and flame detection from an Intel Edison. It also detects gestures using the Leap Motion API and uses IBM's Watson Instant Answers to answer questions asked through speech-to-text communication.

I was honored to be one of the first to test AirOS, and let me tell you... it was fascinating. I loved it!!

The list of hardware components used for the demo:

- 1 x Oculus Rift

- 1 x Intel Edison

- 1 x Temperature Sensor

- 1 x Flame Sensor

- 1 x Leap Motion

- 1 x Camera

Source Code: https://github.com/badave/airos.git

Video Demo: https://www.youtube.com/embed/xfqIYuQEkqM

A big THANK YOU to the entire SpaceRocks Apps team, who did a FANTASTIC job organizing the event and allowing me to be part of it. I am already counting down the days to the next one... Is it 2016 yet??!!